Across the many TechDDs I’ve done this year, a clear pattern is emerging. More and more code is written by AI - sometimes entire features land as PRs overnight. Engineers spend less time typing and more time prompting and reviewing code.

I’m also introducing AI-enhanced development inside an established engineering team as a fractional CTO, and the picture is very mixed. Some things speed up dramatically, while in other places those gains disappear again. I’m iterating on the process week by week to remove these speed bumps and understand what truly drives or blocks efficiency.

In a way, this is about watching modern engineering best practices evolve under AI - after years of relative stability.

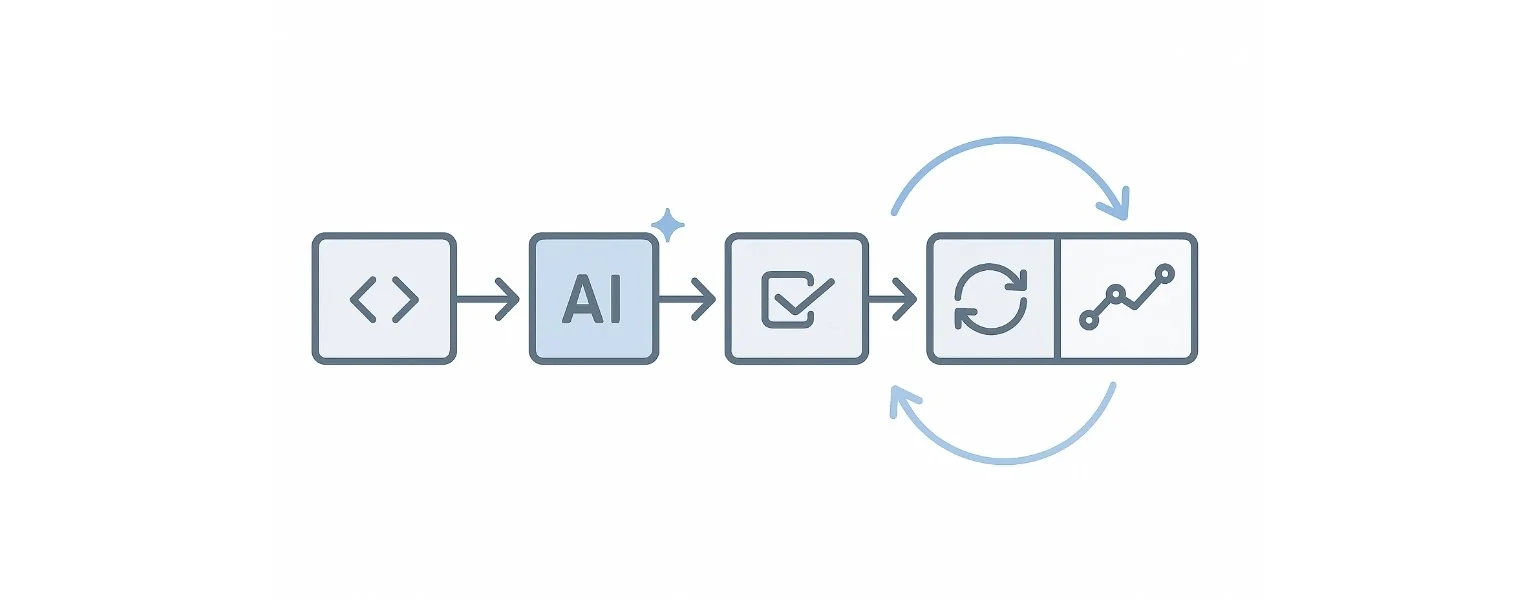

As AI floods teams with more code, the value of individual lines decreases. What seems to matter far more is the architecture and the guardrails around it. Automated tests, CI/CD, logs, and monitoring or tracing become the backbone that lets AI move safely. When these are solid, teams see real acceleration. When they’re loose and trust in AI-generated code is low, any speed gain evaporates quickly.

The tooling layer is evolving just as fast. Cursor rules and similar mechanisms turn team conventions, coding guidelines, and architecture patterns into something executable, helping AI operate within the intended boundaries rather than drifting away from them.
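
To make "executable" a bit more concrete, here is a minimal sketch of an architecture guardrail that could run in CI alongside such rules. It is not how Cursor rules work internally. It is a plain Python check, and the layer names (`domain`, `infrastructure`, `api`), the `FORBIDDEN_IMPORTS` table, and the function names are hypothetical, invented for illustration only.

```python
import ast

# Hypothetical layering rule (an assumption for this sketch):
# code in the "domain" layer must not import from these packages.
FORBIDDEN_IMPORTS = {
    "domain": {"infrastructure", "api"},
}


def imported_top_level_packages(source: str) -> set[str]:
    """Return the top-level package names imported by a piece of Python source."""
    names = set()
    for node in ast.walk(ast.parse(source)):
        if isinstance(node, ast.Import):
            for alias in node.names:
                names.add(alias.name.split(".")[0])
        elif isinstance(node, ast.ImportFrom) and node.module and node.level == 0:
            # level == 0 means an absolute import; relative imports are skipped.
            names.add(node.module.split(".")[0])
    return names


def find_violations(layer: str, source: str) -> list[str]:
    """List the forbidden packages that source code in the given layer imports."""
    forbidden = FORBIDDEN_IMPORTS.get(layer, set())
    return sorted(imported_top_level_packages(source) & forbidden)
```

A CI step could call `find_violations` for each changed file and fail the build when the list is non-empty, so a convention that used to live in a wiki page gets enforced the same way for human-written and AI-written code.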

There are plenty of strong opinions out there - both optimistic and sceptical. But without looking closely at how teams actually integrate AI into their SDLC, it’s hard to compare experiences in a meaningful way and have a proper discussion.

I don’t have the answers yet. This is the start of a thinking process I want to share and have scrutinised - to hear other views and learn from real experiences. That’s the fastest way forward for all of us.